|

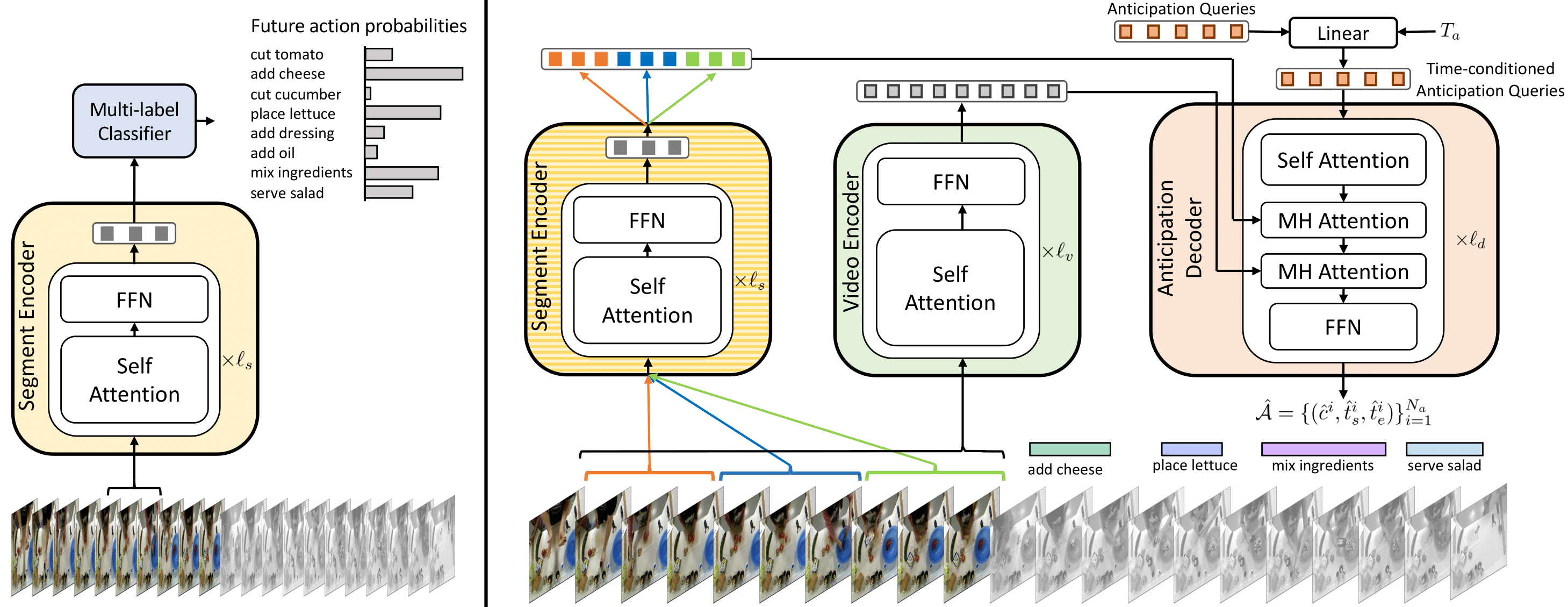

Action anticipation involves predicting future actions after observing the initial portion of a video. Typically, the observed video is processed as a whole to obtain a video-level representation of the ongoing activity, which is then used for future prediction. We introduce ANTICIPATR, which performs long-term action anticipation by leveraging segment-level representations learned using individual segments from different activities, in addition to a video-level representation. We propose a two-stage learning approach to train a novel transformer-based model that uses these two types of representations to directly predict a set of future action instances over any given anticipation duration. Results on the Breakfast, 50Salads, Epic-Kitchens-55, and EGTEA Gaze+ datasets demonstrate the effectiveness of our approach.

|